1. Introduction: What Does “O2 Sensor 0 Days Remaining” Really Mean?

When operating a HACH HQ40d portable multi-parameter analyzer equipped with an LDO101 / LDO10101 dissolved oxygen (DO) probe, users may encounter the on-screen message:

“O2 Sensor 0 days remaining.”

This message often causes confusion among field engineers, laboratory technicians, and equipment resellers. It is frequently misinterpreted as a probe failure, instrument malfunction, or electronic defect. In reality, this message is not a fault code. It is a consumable lifetime notification.

The message indicates that the luminescent sensor cap installed on the LDO probe has reached the end of its manufacturer-defined service life.

2. LDO Technology: Why These Sensors Are Different

To understand this message, it is essential to distinguish LDO (Luminescent Dissolved Oxygen) sensors from traditional electrochemical DO electrodes.

2.1 Conventional DO electrodes

Traditional Clark-type electrodes rely on:

- Anode and cathode systems

- Electrolyte solution

- Oxygen-permeable membranes

They consume oxygen during measurement and are sensitive to flow rate, membrane condition, and electrolyte aging.

2.2 LDO optical dissolved oxygen sensors

HACH’s LDO probes operate using optical fluorescence quenching technology. Blue light excites a luminescent material inside the sensor cap. Dissolved oxygen molecules quench the fluorescence. The instrument measures changes in fluorescence lifetime or phase shift to calculate oxygen concentration.

In this design, the active sensing element is not the probe body, but the luminescent sensor cap at the tip.
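The quenching principle described above is commonly modeled with the Stern-Volmer relation, in which the ratio of unquenched to quenched luminescence lifetime grows linearly with oxygen concentration. The following is a minimal sketch of that textbook model, not HACH's proprietary algorithm. The function names and the constants `TAU0`, `K_SV`, and `FREQ` are hypothetical values chosen only for illustration, and temperature compensation is deliberately left out.

```python
import math


def lifetime_from_phase(phase_rad: float, mod_freq_hz: float) -> float:
    """Luminescence lifetime (s) from the phase lag of the emitted light.

    Assumes a single-exponential decay, for which tan(phi) = 2*pi*f*tau.
    """
    return math.tan(phase_rad) / (2.0 * math.pi * mod_freq_hz)


def oxygen_from_lifetime(tau_s: float, tau0_s: float, k_sv: float) -> float:
    """Oxygen concentration from the Stern-Volmer relation.

    tau0 / tau = 1 + K_sv * [O2]   =>   [O2] = (tau0 / tau - 1) / K_sv
    """
    return (tau0_s / tau_s - 1.0) / k_sv


# Hypothetical constants, for illustration only (not taken from any datasheet).
TAU0 = 60e-6    # unquenched lifetime in seconds
K_SV = 0.25     # Stern-Volmer constant in L/mg
FREQ = 5_000.0  # LED modulation frequency in Hz

# Simulate the phase lag the detector would see at 8 mg/L, then invert it.
tau = TAU0 / (1.0 + K_SV * 8.0)
phase = math.atan(2.0 * math.pi * FREQ * tau)
do_mg_l = oxygen_from_lifetime(lifetime_from_phase(phase, FREQ), TAU0, K_SV)
print(f"Recovered DO: {do_mg_l:.3f} mg/L")
```

Because both `TAU0` and `K_SV` are properties of the luminescent material, any aging of the dye in the cap shifts the computed concentration. This is why the cap, rather than the probe body, carries the lifetime limit.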

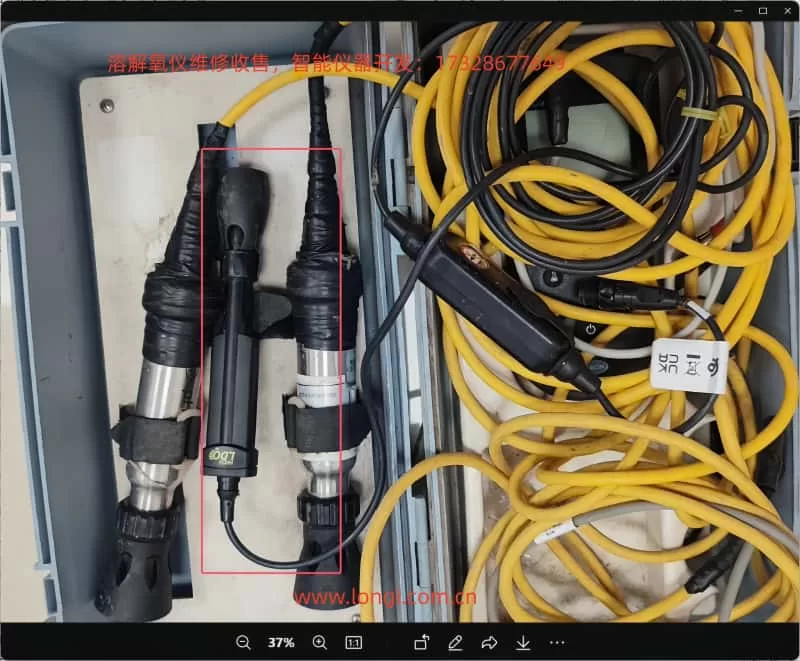

3. Physical Structure of an LDO101/LDO10101 Probe

An LDO probe can be functionally divided into three major sections:

- Probe body

  - LED excitation source

  - Photodetector

  - Temperature sensor

  - Signal processing electronics

- Luminescent sensor cap (consumable)

  - Luminescent dye layer

  - Oxygen diffusion layer

  - Protective optical coating

  - Integrated lifetime memory chip

- Cable and connector assembly

Only the sensor cap is subject to predictable chemical aging. The probe body itself is typically long-life.

4. Where Does the “Remaining Days” Value Come From?

Each genuine LDO sensor cap contains an internal memory device that stores:

- Manufacturing data

- Installation time

- Operating lifetime

- Calibration information

The HQ-series instruments periodically read this data and calculate the remaining validated service life. HACH specifies a typical service life of approximately one year for an LDO sensor cap.

When this counter reaches zero, the instrument displays:

“O2 Sensor 0 days remaining.”

This mechanism ensures data quality control and traceability rather than indicating immediate electrical failure.
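The countdown logic can be sketched as simple date arithmetic. This is an illustrative model only: the actual firmware algorithm and memory layout are not documented here, so the function names, the 365-day constant (taken from the "approximately one year" figure above), and the assumption that counting starts at installation are all hypothetical.

```python
from datetime import date

# Assumption: rated life of about one year, as stated in the text.
RATED_LIFE_DAYS = 365


def days_remaining(installed_on: date, today: date,
                   rated_life_days: int = RATED_LIFE_DAYS) -> int:
    """Days of rated service life left, clamped so it never goes below zero."""
    elapsed = (today - installed_on).days
    return max(0, rated_life_days - elapsed)


def status_message(remaining: int) -> str:
    """Format the counter the way the on-screen message is worded."""
    return f"O2 Sensor {remaining} days remaining"


# Example: a cap installed on 1 January 2024, checked six months later
# and again well after the rated life has elapsed.
print(status_message(days_remaining(date(2024, 1, 1), date(2024, 7, 1))))
print(status_message(days_remaining(date(2024, 1, 1), date(2025, 6, 1))))
```

Clamping at zero mirrors the behavior described above: once the rated life is used up, the counter stays at "0 days remaining" instead of reporting an error.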

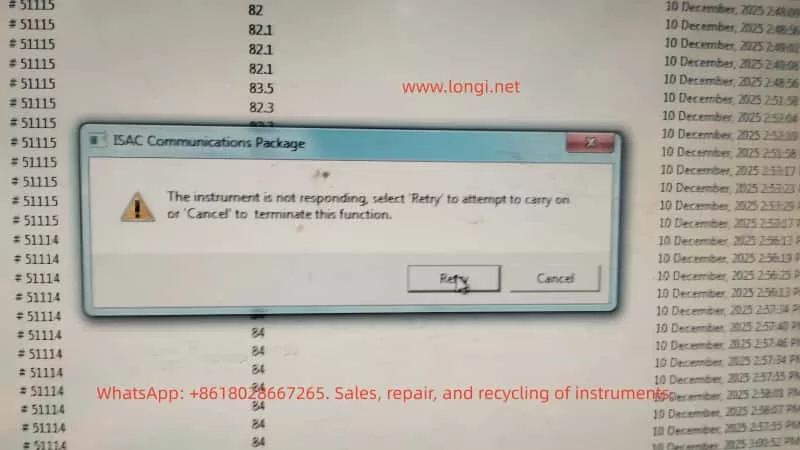

5. Is This a Malfunction?

From an engineering standpoint, the answer is clear:

No. This is not a hardware fault.

It does not indicate:

- Open or short circuits

- Optical module failure

- Communication errors

- Mainboard defects

- Loss of sensor detection

It indicates that the sensor cap has exceeded the period over which the manufacturer guarantees accuracy and response performance.

6. Can the Instrument Still Measure?

6.1 Functional perspective

In most firmware versions, the instrument will continue to display DO readings. The probe may still respond to oxygen changes.

However, after the luminescent material ages:

- Fluorescence intensity decreases

- Signal-to-noise ratio degrades

- Response time increases

- Temperature compensation accuracy declines

6.2 Engineering and compliance perspective

For regulated environments, laboratories, environmental monitoring projects, or contract testing, continued operation beyond the rated life is not acceptable. Measurement data may no longer meet quality or traceability requirements.

In such contexts, replacement of the sensor cap is mandatory.

7. Can the LDO10101 Sensor Cap Be Replaced?

Yes. The LDO system is designed around a replaceable sensor cap architecture.

The luminescent cap is a standard consumable component supplied by the manufacturer. Replacement does not require probe disassembly or electronic repair. Once a new cap is installed, the instrument automatically recognizes the new lifetime chip.

After replacement, the remaining life counter resets and the probe must be recalibrated.

8. Standard Replacement and Recovery Procedure

A professional maintenance workflow includes:

- Removing the expired sensor cap

- Installing a new genuine luminescent sensor cap

- Powering the instrument and verifying cap recognition

- Performing a full dissolved oxygen calibration, using either:

  - Water-saturated air, or

  - Air-saturated water

Calibration is essential because optical compensation coefficients are cap-specific.

9. Economic and Project-Level Considerations

Unlike traditional membrane kits, LDO sensor caps are a higher-value consumable. Market pricing is typically in the hundreds of US dollars per unit.

This creates an important engineering reality:

The main operational cost of LDO dissolved oxygen probes is concentrated in the sensor cap, not in the probe body.

Therefore, during:

- Instrument procurement

- Maintenance planning

- Project bidding

- Second-hand equipment evaluation

the remaining sensor cap lifetime must be treated as a critical parameter.
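One way to make this parameter concrete in a bid or a second-hand valuation is to treat the cap as a prorated consumable. The sketch below is a simple linear proration under stated assumptions: the function names and the 400 USD price are hypothetical placeholders, not quoted figures, and the 365-day rated life follows the "approximately one year" figure given earlier.

```python
def annual_cap_cost(cap_price_usd: float, rated_life_days: int = 365) -> float:
    """Consumable cost per year of operation for one probe."""
    return cap_price_usd * 365 / rated_life_days


def residual_cap_value(cap_price_usd: float, days_remaining: int,
                       rated_life_days: int = 365) -> float:
    """Linear proration of the cap price over its remaining rated life."""
    usable_days = max(0, min(days_remaining, rated_life_days))
    return cap_price_usd * usable_days / rated_life_days


# Hypothetical cap price, used only to illustrate the arithmetic.
CAP_PRICE_USD = 400.0

for remaining in (365, 73, 0):
    value = residual_cap_value(CAP_PRICE_USD, remaining)
    print(f"{remaining:>3} days remaining -> residual cap value {value:.2f} USD")
```

Under this model, an instrument showing "0 days remaining" carries no residual cap value, so the full price of a new cap belongs in the purchase or maintenance budget.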

10. Common Misdiagnoses in the Field

In service and resale environments, this message is often incorrectly interpreted as:

- Probe failure

- Instrument motherboard defects

- Software malfunction

- Optical module damage

Such misinterpretations frequently lead to unnecessary disassembly or replacement of functional hardware.

When this message is the only symptom, the correct diagnostic conclusion is:

Consumable lifetime expiration, not electronic failure.
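A service team could encode this reasoning as a short triage rule so that the lifetime message is never escalated as a hardware fault by default. The sketch below is a hypothetical decision aid, not a manufacturer procedure. The `ProbeObservation` fields and the returned strings are invented for illustration, and the final hardware branch (a valid cap that shows no oxygen response) is an added assumption that goes beyond the text above.

```python
from dataclasses import dataclass


@dataclass
class ProbeObservation:
    """What a technician can observe without opening the instrument."""
    probe_detected: bool              # the meter recognizes the probe
    cap_days_remaining: int           # value shown in the lifetime message
    reading_responds_to_oxygen: bool  # reading changes between air and low-O2 sample


def triage(obs: ProbeObservation) -> str:
    """Map observations to a first maintenance action."""
    if not obs.probe_detected:
        # Loss of detection is a different issue from the lifetime message.
        return "investigate hardware: probe not detected"
    if obs.cap_days_remaining <= 0:
        # Consumable lifetime expiration, not electronic failure.
        return "replace sensor cap, then recalibrate"
    if not obs.reading_responds_to_oxygen:
        # Assumption: a valid cap with no response merits a hardware check.
        return "investigate hardware: no oxygen response"
    return "no action required"


print(triage(ProbeObservation(True, 0, True)))
```

Checking the cap lifetime before any hardware branch reflects the point of this section: the cheapest and most likely explanation is tested first, which avoids unnecessary disassembly.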

11. Implications for Service Engineers and Equipment Resellers

For technical service teams and secondary-market suppliers, the “0 days remaining” message provides immediate insight into the true maintenance status of a dissolved oxygen system.

An instrument showing this message should be classified as:

“Operational, but requiring consumable replacement before certified use.”

Failure to communicate this condition to end users may result in incorrect pricing, unexpected operating costs, or post-sale disputes.

12. Design Perspective: Why Manufacturers Use Lifetime-Managed Sensor Caps

The LDO approach delivers clear advantages:

- No oxygen consumption

- Reduced flow dependency

- Lower drift compared to electrochemical electrodes

- Simplified routine maintenance

However, these advantages require:

- Precisely formulated luminescent materials

- Strict optical stability control

- Integrated lifetime monitoring

Modern analytical instrumentation increasingly adopts this model: long-life core hardware combined with digitally managed consumables.

13. Conclusion

When a HACH HQ40d analyzer displays:

“O2 Sensor 0 days remaining,”

the engineering meaning is unequivocal:

The luminescent sensor cap on the LDO10101 dissolved oxygen probe has reached the end of its validated service life. The probe itself is not defective. Replacement of the sensor cap, followed by proper calibration, is the correct and complete solution.

This message represents a maintenance requirement, not a hardware failure.